The Atlantic recently ran a story headlined “He Was Homeschooled for Years, and Fell So Far Behind.” It profiles Stefan Merrill Block, who was homeschooled in his early years and later struggled to catch up once he entered traditional schooling. But one rough experience doesn’t invalidate an entire movement that is delivering superior results for millions of families across the country.

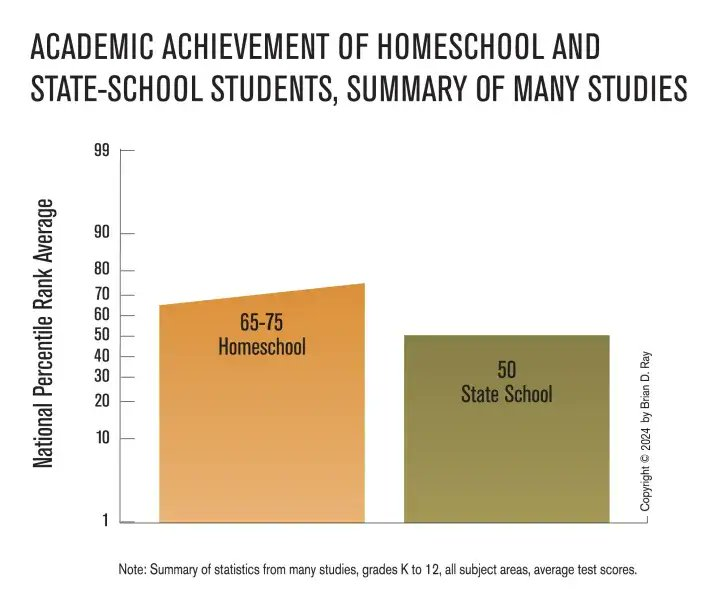

Homeschool students are outperforming kids in government schools by a wide margin. Brian Ray’s peer-reviewed systematic review in the Journal of School Choice examined dozens of studies on the topic. Seventy-eight percent of those studies found homeschoolers scoring significantly higher academically than their public school peers. On standardized tests, homeschoolers beat traditional school kids by 15 to 25 percentile points. These results hold up regardless of family background, income level, or whether the parents ever held a teaching certificate.

Government schools deliver the opposite outcome. In Chicago alone, there are 55 public schools where not a single kid tests proficient in math, even though the district spends about $30,000 per student each year. The Nation’s Report Card shows nearly 80 percent of US kids aren’t proficient in math. That’s the real crisis staring us in the face, and it demands accountability from the system that claims to serve our children.

The critics who demand tighter rules on homeschooling never mention these disasters in public education. They won’t even consider shutting down the failing public schools that waste billions of taxpayer dollars and fail thousands of kids every year. But when families opt out and choose something better for their kids? Suddenly it’s time for government oversight and heavy-handed regulations. This double standard exposes the true agenda at play.

Teachers’ unions watch academic achievement collapse and never push for less funding. Every bad score just becomes another excuse to demand more money from the public. If they applied that same logic to homeschooling and truly believed it was underperforming, they would be calling for more taxpayer money to fix it, not more regulation.

This logical inconsistency gives away the game: these groups are laser-focused on defending the government school monopoly at all costs. They want to keep other people’s kids locked in their failure factories so they can siphon as much money as possible away from families and into the system.

Census Bureau numbers confirm just how much the tide is turning. Homeschooling enrollment has at least doubled since 2019, and the growth shows no signs of slowing. COVID laid bare the dysfunction in government schools, from useless remote learning to radical ideologies in the classroom, and parents decided they had seen enough.

Randi Weingarten, president of the American Federation of Teachers, wants to go further still, officially partnering her union with the World Economic Forum to shape a national curriculum. That’s the future they envision: handing control of education to elites in Davos instead of trusting parents.

Even if the evidence showed homeschooling only matching the factory-model school system on average, the state would still have no legitimate authority to interfere. Kids don’t belong to the government. The Supreme Court made that crystal clear back in 1925 with Pierce v. Society of Sisters, ruling that “the child is not the mere creature of the State.” Oregon had tried to force every child into public schools, but the justices struck it down and affirmed parents’ fundamental right to direct their children’s education.

The Court had laid the groundwork two years earlier in Meyer v. Nebraska (1923), which protected parents’ liberty to direct their children’s education and struck down a state law banning foreign-language instruction before the eighth grade, in a case involving a teacher at a Lutheran parochial school. Then, in Wisconsin v. Yoder in 1972, the justices sided with Amish parents who declined to send their children to high school in order to preserve their faith and community way of life. These landmark decisions enshrine parental rights as bedrock constitutional protections that no bureaucrat can simply override.

The state has the burden of proof when it comes to intervening in family life. Parents shouldn’t be forced to prove their innocence upfront just to educate their own children at home. Government should only step in with clear evidence of real abuse, and even then, the intervention must be narrow and targeted.

Imagine government officials sitting at every family’s dinner table each week, inspecting meals “just in case” some parents aren’t feeding their kids right. That scenario would be an obvious violation of our Fourth Amendment protection against unreasonable searches, and no one would stand for it. Yet that’s the invasive logic behind calls to regulate homeschooling as if every parent were a suspect.

History shows exactly where this path of centralized control leads. The Nazi regime banned homeschooling in 1938, with criminal penalties attached, to create a monopoly on thought and ensure its authoritarian ideology took root in every young mind. America’s own compulsory government school system didn’t emerge from some noble tradition of freedom. It was imported from Prussia, the leading German state of the era, and aggressively promoted in Massachusetts by Horace Mann. The whole model was engineered to produce obedient soldiers and compliant factory workers, not independent thinkers who question authority.

Homeschoolers sidestep the school system’s ugliest realities altogether. They avoid the gangs, the drugs, the mindless conformity, the left-wing indoctrination, the social promotion, and the constant threat of violence that plague too many government institutions. An FBI report from 2025 documented 1.3 million crimes committed in schools over just a few recent years.

And let’s not forget the subject of The Atlantic’s own story. The magazine concedes that Stefan Merrill Block grew up to become a successful, well-educated author, complete with New York Times bestsellers to his name. Its highlighted “failure” case actually produced someone thriving in the real world. That undercuts the panic the piece is trying to stoke.

Regulations have failed to fix the problems in public schools; they have often entrenched mediocrity and waste. Importing the same model into homeschooling would risk spreading those shortcomings rather than solving them. Many on the left are uncomfortable that they lack the same direct control over homeschooling parents that they exercise over most school districts, and that gap in authority has led some to push for sidelining families in favor of greater state oversight.

Parents know their children better than any distant bureaucrat ever could. Homeschooling delivers measurable results, saves taxpayers money, and upholds the core American value of freedom.

The Atlantic can publish as many cautionary stories as it likes, but the data, the Supreme Court precedents, and basic common sense remain firmly on the side of parental authority. It’s time to end the double standard and the attacks on homeschooling, and let families lead the way in educating the next generation.